Building a Strong Foundation: Essential Skills for Data Engineering Professionals

As someone deeply immersed in the field of data engineering, I understand the importance of having a strong foundation of skills to succeed in this dynamic and fast-paced industry. In this blog post, I’ll discuss the essential skills that every data engineering professional should possess, offering insights and guidance for those looking to embark on a career in this field.

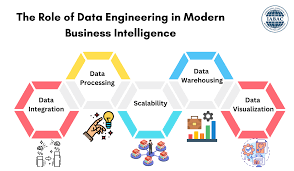

Understanding the Role of Data Engineering

Before diving into the essential skills, it’s crucial to understand the role of data engineering within the broader landscape of data science and analytics. Data engineering involves designing, building, and maintaining the infrastructure and architecture necessary for the efficient and reliable processing of large volumes of data.

Key Takeaways:

– Developing a strong foundation of skills is essential for success in data engineering, including proficiency in programming languages, data modeling, ETL processes, big data technologies, cloud computing platforms, and data pipeline orchestration.

– Continuous learning and professional development are key to staying abreast of emerging technologies and best practices in the field of data engineering.

– Collaboration with peers, participation in online communities, and hands-on experience with real-world projects are valuable opportunities for honing and expanding your skills as a data engineering professional.

Essential Skills for Data Engineering Professionals

I’ll outline the key skills that aspiring data engineering professionals should focus on developing to excel in their careers.

1. Proficiency in Programming Languages:

– Python: Python is widely used in data engineering for tasks such as data manipulation, scripting, and automation.

– SQL: SQL (Structured Query Language) is essential for querying, manipulating, and managing data stored in relational databases.
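The sketch below shows how the two languages divide the work: SQL performs a set-based aggregation, and Python handles the scripting around the result. It uses Python's built-in `sqlite3` module with an in-memory database as a stand-in for a production relational database. The `orders` table and its rows are hypothetical.

```python
import sqlite3

# Hypothetical sample data: (order_id, customer, amount)
orders = [
    (1, "alice", 120.0),
    (2, "bob", 75.5),
    (3, "alice", 30.0),
]

# An in-memory SQLite database stands in for a real relational database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)")
conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", orders)

# SQL does the set-based aggregation...
rows = conn.execute(
    "SELECT customer, SUM(amount) AS total FROM orders GROUP BY customer ORDER BY customer"
).fetchall()

# ...and Python scripts around the result.
totals = {customer: round(total, 2) for customer, total in rows}
print(totals)  # {'alice': 150.0, 'bob': 75.5}
conn.close()
```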

2. Data Modeling and Database Design:

– Understanding data modeling concepts and techniques, such as entity-relationship modeling and dimensional modeling, is crucial for designing efficient and scalable databases.

Data modeling is the process of designing the structure of databases to efficiently store and organize data. Data engineers need to understand various data modeling techniques, such as entity-relationship modeling and dimensional modeling, to design databases that meet their organization’s analysis and reporting requirements. This involves defining data entities, the relationships between them, and the attributes of each entity. Data engineers must also understand database design principles such as normalization, which reduces redundancy and protects data integrity, and denormalization, which deliberately duplicates data to speed up read-heavy analytical queries. Striking the right balance between the two is key to the performance and scalability of database systems.
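Here is a minimal sketch of dimensional modeling for a hypothetical sales domain. It builds a star schema with one fact table (`fact_sales`) that holds measures and foreign keys, plus two dimension tables (`dim_customer`, `dim_date`) that hold descriptive attributes. SQLite in memory is used purely for illustration, and all table and column names are assumptions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Dimension tables hold descriptive attributes (hypothetical schema).
CREATE TABLE dim_customer (
    customer_key INTEGER PRIMARY KEY,
    name TEXT NOT NULL,
    region TEXT NOT NULL
);
CREATE TABLE dim_date (
    date_key INTEGER PRIMARY KEY,   -- e.g. 20240115
    year INTEGER NOT NULL,
    month INTEGER NOT NULL
);
-- The fact table stores measures plus a foreign key to each dimension.
CREATE TABLE fact_sales (
    customer_key INTEGER NOT NULL REFERENCES dim_customer(customer_key),
    date_key INTEGER NOT NULL REFERENCES dim_date(date_key),
    amount REAL NOT NULL
);
""")
conn.executemany("INSERT INTO dim_customer VALUES (?, ?, ?)",
                 [(1, "Acme", "EU"), (2, "Globex", "US")])
conn.executemany("INSERT INTO dim_date VALUES (?, ?, ?)",
                 [(20240115, 2024, 1), (20240210, 2024, 2)])
conn.executemany("INSERT INTO fact_sales VALUES (?, ?, ?)",
                 [(1, 20240115, 100.0), (2, 20240115, 250.0), (1, 20240210, 50.0)])

# A typical reporting query: join the fact table to its dimensions and aggregate.
report = conn.execute("""
    SELECT c.region, d.month, SUM(f.amount)
    FROM fact_sales f
    JOIN dim_customer c ON c.customer_key = f.customer_key
    JOIN dim_date d ON d.date_key = f.date_key
    GROUP BY c.region, d.month
    ORDER BY c.region, d.month
""").fetchall()
print(report)  # [('EU', 1, 100.0), ('EU', 2, 50.0), ('US', 1, 250.0)]
```

Storing `region` directly on `dim_customer` is a deliberate denormalization. A fully normalized design would move regions into their own table, at the cost of an extra join in every regional report.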

3. ETL (Extract, Transform, Load) Processes:

– Data engineers should be proficient in designing and implementing ETL processes to extract data from various sources, transform it into a usable format, and load it into data warehouses or data lakes.

ETL processes are fundamental to data engineering: they extract data from various sources, transform it into a usable format, and load it into data warehouses or data lakes for analysis. Data engineers must be proficient in designing and implementing ETL workflows with tools such as Apache Spark, Talend, or Informatica, often alongside a streaming platform like Apache Kafka for ingesting data as it arrives. The transform stage typically covers data cleansing, validation, enrichment, and aggregation, which together ensure that the data is accurate, consistent, and ready for analysis.
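The three ETL stages can be sketched in plain Python. This is an illustrative, single-process example, not how Spark, Talend, or Informatica implement ETL. The CSV contents, column names, and function names (`extract`, `transform`, `load`) are all hypothetical. The sketch covers cleansing (trimming and normalizing country codes, dropping duplicate orders), validation (rejecting rows whose amount is not a number), loading into a table, and a final aggregation.

```python
import csv
import io
import sqlite3

# Extract: a hypothetical CSV export stands in for a source system.
RAW_CSV = """order_id,country,amount
1, us ,19.99
2,DE,5.00
3,us,not_a_number
4,fr,12.50
2,DE,5.00
"""

def extract(text):
    """Read raw rows from CSV text as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Validate, cleanse, and deduplicate rows; return (clean_rows, rejected_rows)."""
    clean, rejected, seen = [], [], set()
    for row in rows:
        try:
            order_id = int(row["order_id"])
            amount = float(row["amount"])
        except ValueError:
            rejected.append(row)  # validation: keep bad rows for later inspection
            continue
        if order_id in seen:      # cleansing: drop duplicate orders
            continue
        seen.add(order_id)
        country = row["country"].strip().upper()  # cleansing: normalize codes
        clean.append((order_id, country, amount))
    return clean, rejected

def load(conn, rows):
    """Load transformed rows into a warehouse-style table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (order_id INTEGER PRIMARY KEY, country TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
clean, rejected = transform(extract(RAW_CSV))
load(conn, clean)

# Aggregate the loaded data so it is ready for analysis.
per_country = conn.execute(
    "SELECT country, SUM(amount) FROM orders GROUP BY country ORDER BY country"
).fetchall()
print(per_country)    # [('DE', 5.0), ('FR', 12.5), ('US', 19.99)]
print(len(rejected))  # 1
```

Keeping rejected rows rather than silently discarding them is a common design choice, because it lets you trace data quality problems back to the source.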

4. Big Data Technologies:

– Familiarity with big data technologies such as Apache Hadoop, Spark, and Kafka is essential for processing and analyzing large volumes of data efficiently.

As data volumes keep growing, data engineers must be familiar with big data technologies that make it practical to process and analyze large datasets. These include distributed computing frameworks like Apache Hadoop and Apache Spark, which split the work into tasks that run in parallel across clusters of servers. Data engineers should also know the technologies used to store and query big data, such as the Hadoop Distributed File System (HDFS) for storage and Apache Hive for SQL-style querying over data stored in Hadoop.
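To illustrate the idea behind parallel processing, here is a local, single-process sketch of the MapReduce programming model that Hadoop MapReduce implements and Spark generalizes. It is not Hadoop or Spark code. The input "partitions" are hypothetical, and a real framework would run the map calls on different machines.

```python
from collections import defaultdict
from itertools import chain

# Hypothetical input, split into partitions as a distributed file system would store it.
partitions = [
    ["spark makes big data fast", "hadoop stores big data"],
    ["spark and hadoop", "big data"],
]

def map_phase(lines):
    """Map: emit (word, 1) pairs for one partition, independently of the others."""
    return [(word, 1) for line in lines for word in line.split()]

def shuffle(mapped):
    """Shuffle: group every emitted value by key across all partitions' output."""
    grouped = defaultdict(list)
    for key, value in chain.from_iterable(mapped):
        grouped[key].append(value)
    return grouped

def reduce_phase(grouped):
    """Reduce: combine the values collected for each key."""
    return {key: sum(values) for key, values in grouped.items()}

mapped = [map_phase(p) for p in partitions]  # a cluster would run these in parallel
counts = reduce_phase(shuffle(mapped))
print(counts["big"], counts["spark"], counts["hadoop"])  # 3 2 2
```

Because each `map_phase` call touches only its own partition, a framework can schedule those calls on separate machines. The shuffle is the step that moves data across the network, which is why it is often the most expensive part of a distributed job.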

5. Cloud Computing Platforms:

– Knowledge of cloud computing platforms like AWS, Azure, and Google Cloud is increasingly important, as many organizations are migrating their data infrastructure to the cloud for scalability and flexibility.

6. Data Pipeline Orchestration:

– Data engineers should be skilled in orchestrating complex data pipelines using tools like Apache Airflow or Luigi to schedule, monitor, and manage data workflows.

Data engineers are responsible for orchestrating complex data pipelines that automate the flow of data from source to destination. This involves scheduling, monitoring, and managing data workflows so that data is processed and delivered on time. Orchestration tools such as Apache Airflow, Luigi, and Apache NiFi let data engineers define, execute, and monitor these pipelines. Airflow models each pipeline explicitly as a directed acyclic graph (DAG) of tasks, Luigi builds an equivalent dependency graph from the requirements each task declares, and NiFi uses a visual, flow-based model. In each case, the tool schedules tasks, handles the dependencies between them, and manages workflow execution in a scalable and fault-tolerant manner.
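The core of DAG-based orchestration, running tasks in dependency order and retrying failures, can be sketched with Python's standard-library `graphlib` module (Python 3.9+). This is not Airflow's or Luigi's API. The task names, the `run_task` stand-in, and the retry policy are hypothetical, and `transform` is made to fail once on purpose so that the retry path runs.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform": {"extract_orders", "extract_customers"},
    "load_warehouse": {"transform"},
    "refresh_dashboard": {"load_warehouse"},
}

def run_task(name, attempt):
    """Stand-in for real work; 'transform' fails on its first attempt to exercise retries."""
    if name == "transform" and attempt == 1:
        raise RuntimeError("transient failure")
    return f"{name} ok"

def run_pipeline(dag, max_retries=2):
    """Run tasks in dependency order, retrying each failed task up to max_retries times."""
    log = []
    for task in TopologicalSorter(dag).static_order():
        for attempt in range(1, max_retries + 2):
            try:
                log.append(run_task(task, attempt))
                break
            except RuntimeError as exc:
                log.append(f"{task} attempt {attempt} failed: {exc}")
        else:
            # Every attempt failed: stop so downstream tasks never see bad data.
            raise RuntimeError(f"{task} exhausted retries")
    return log

for line in run_pipeline(pipeline):
    print(line)
```

`static_order()` raises `graphlib.CycleError` if the dependencies contain a cycle, which mirrors why orchestrators require pipelines to be acyclic.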

7. Data Visualization and Reporting:

– While not always a primary responsibility, data engineers should have a basic understanding of data visualization and reporting tools to communicate insights effectively to stakeholders.

Conclusion

Building a strong foundation of skills is essential for aspiring data engineering professionals looking to excel in their careers. By mastering key concepts and technologies, continuously learning and adapting to new developments in the field, and seeking out opportunities for hands-on experience and collaboration, data engineers can unlock new opportunities, drive innovation in their organizations, and position themselves for success in this dynamic and rapidly evolving field.

FAQ

How can I develop my programming skills for data engineering?

Practice coding regularly, work on real-world projects, and take online courses or tutorials to learn programming languages such as Python and SQL. Additionally, participate in coding challenges and collaborate with peers to enhance your skills.

Do I need a degree in computer science or engineering to become a data engineer?

While a background in computer science, engineering, or a related field can be beneficial, it’s not always necessary. Many successful data engineers come from diverse educational backgrounds and acquire the necessary skills through self-study, online courses, and hands-on experience.

How important is cloud computing expertise for data engineers?

Cloud computing expertise is becoming increasingly important for data engineers, as more organizations migrate their data infrastructure to the cloud. Familiarity with cloud platforms like AWS, Azure, and Google Cloud can open up new career opportunities and enable data engineers to build scalable and cost-effective data solutions.
